[In-Depth] From "No Moat" to "New Paradigm": Revisiting Google's Prophetic 2023 Memo Through DeepSeek's Rise

"The only moat is the velocity of iteration" - How a leaked Google memo predicted the future of AI, but missed who would lead it

In May 2023, a leaked internal Google memo shook the AI world with a stark warning: "We have no moat, and neither does OpenAI." The author argued that open-source AI development would eventually match proprietary systems, a shift most readers assumed would be driven by Western developers and researchers. What few predicted was that less than two years later, a Chinese company would lend this theory its strongest support, but in a radically different way.

The Original Memo: What It Got Right

Key Predictions:

1. The only moat is the velocity of iteration

Rapid Development Cycle: DeepSeek demonstrated unprecedented speed in model releases, moving from V2 to V3 to R1 within months, each bringing significant architectural innovations rather than mere parameter scaling.

Multiple Major Releases: While other companies spent months fine-tuning existing architectures, DeepSeek released multiple groundbreaking models in rapid succession, each addressing different aspects of AI capabilities – from general intelligence to specialized reasoning.

Continuous Architectural Innovation: Instead of following established patterns, DeepSeek continuously introduced new architectural concepts, such as the MLA (Multi-head Latent Attention) mechanism, which compresses attention keys and values into a smaller latent vector and so cuts the memory and compute needed to run the model.

Swift Implementation: New ideas moved from conception to implementation at remarkable speed, exemplified by how a young researcher's interest in attention mechanisms evolved into the revolutionary MLA architecture within months.
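To make the MLA idea mentioned above more concrete, here is a minimal sketch of latent key/value compression in plain Python. It is an illustration under stated assumptions, not DeepSeek's implementation: the toy sizes, the weight names (`W_down`, `W_up_k`, `W_up_v`), and the helper functions are all hypothetical, and the sketch leaves out details of the real design such as positional encoding. The point it shows is that only a small latent vector is cached per token, and keys and values are rebuilt from it when needed.

```python
import random

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

def random_matrix(rows, cols, rng):
    """Build a rows x cols matrix of random weights (stand-in for trained weights)."""
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]

# Hypothetical toy sizes, chosen only for illustration.
D_MODEL = 16   # hidden size of each token
N_HEADS = 4    # number of attention heads
D_HEAD = 4     # size of each head's key and value
D_LATENT = 4   # size of the compressed key/value latent

rng = random.Random(0)
W_down = random_matrix(D_LATENT, D_MODEL, rng)           # hidden state -> latent
W_up_k = random_matrix(N_HEADS * D_HEAD, D_LATENT, rng)  # latent -> all keys
W_up_v = random_matrix(N_HEADS * D_HEAD, D_LATENT, rng)  # latent -> all values

def cache_token(hidden):
    """Return the small latent vector that is stored for this token."""
    return matvec(W_down, hidden)

def expand(latent):
    """Rebuild the keys and values of every head from a cached latent."""
    return matvec(W_up_k, latent), matvec(W_up_v, latent)

def standard_cache_floats(n_heads, d_head):
    """Floats cached per token per layer when full keys and values are stored."""
    return 2 * n_heads * d_head

def latent_cache_floats(d_latent):
    """Floats cached per token per layer when only the latent is stored."""
    return d_latent

hidden = [rng.uniform(-1.0, 1.0) for _ in range(D_MODEL)]
latent = cache_token(hidden)
keys, values = expand(latent)

full = standard_cache_floats(N_HEADS, D_HEAD)
small = latent_cache_floats(D_LATENT)
print(f"cached floats per token: {full} -> {small}")
# prints: cached floats per token: 32 -> 4
```

With these toy numbers the cache shrinks eightfold. The trade-off is an extra matrix multiplication to rebuild keys and values, which is why the size of the latent is a design choice rather than a free win.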

2. Open source will catch up and surpass

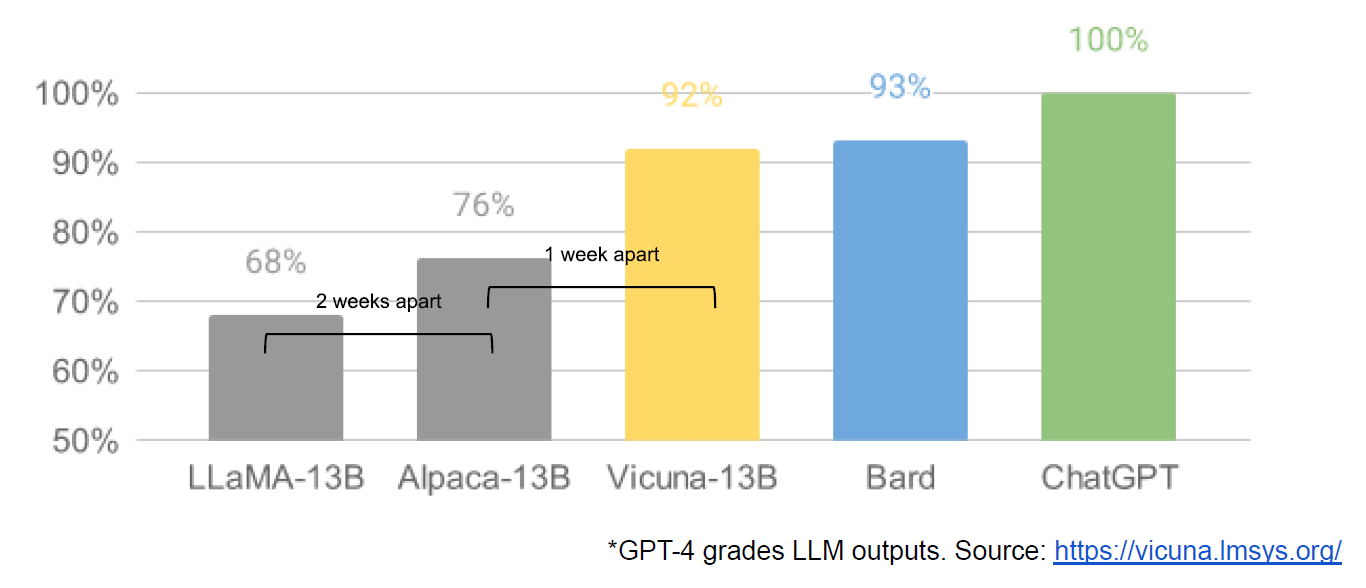

Matching Proprietary Models: DeepSeek V3 not only matched but on some benchmarks exceeded the performance of GPT-4o and Claude 3.5 Sonnet, showing that open-source development could reach parity with heavily funded proprietary systems.

Competitive Performance: The R1 model demonstrated that open-source approaches could compete directly with OpenAI's o1 in reasoning and problem-solving tasks, achieving similar results at a fraction of the cost.

Cost Efficiency Breakthrough: By achieving state-of-the-art performance with a reported training cost of roughly $6 million, a figure that covers the GPU time of the final training run rather than earlier research, experiments, or staff, DeepSeek showed that open-source innovation could dramatically reduce the resource requirements previously thought necessary for advanced AI development.

Community Improvements: The open-source nature of DeepSeek's models led to rapid community adoption and improvement. Berkeley researchers, for example, reported reproducing key R1 techniques in a small model for about $30, which supports the memo's prediction about community-driven advancement.
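The roughly $6 million training cost cited above is simple arithmetic: GPU-hours multiplied by an assumed rental price. The sketch below reproduces that calculation using the figures given in the DeepSeek-V3 technical report as best recalled here, so treat the exact numbers as assumptions. The hourly price is the report's own assumed rental rate, not a measured expense, and the function name is hypothetical.

```python
# GPU-hours per training stage, as reported for DeepSeek-V3 (assumed figures).
GPU_HOURS = {
    "pre-training": 2_664_000,
    "context extension": 119_000,
    "post-training": 5_000,
}

# Assumed rental price per GPU-hour in US dollars.
PRICE_PER_GPU_HOUR_USD = 2.0

def training_cost_usd(gpu_hours, price_per_hour):
    """Total cost: the sum of GPU-hours across stages times the hourly price."""
    return sum(gpu_hours.values()) * price_per_hour

total_hours = sum(GPU_HOURS.values())
cost = training_cost_usd(GPU_HOURS, PRICE_PER_GPU_HOUR_USD)
print(f"{total_hours:,} GPU-hours x ${PRICE_PER_GPU_HOUR_USD:.2f} = ${cost:,.0f}")
# prints: 2,788,000 GPU-hours x $2.00 = $5,576,000
```

The calculation covers only the final training run. Hardware purchases, failed experiments, data work, and salaries are outside it, which is worth keeping in mind when comparing the figure with other companies' budgets.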

3. Training compute is not a moat

Efficient Resource Usage: DeepSeek V3's development proved that superior architecture and efficient training methods could achieve state-of-the-art results with dramatically fewer resources than competitors, demonstrating that raw computing power isn't the decisive factor.

Berkeley Replication: The reported replication of R1's core technique by Berkeley researchers for about $30, albeit at a much smaller scale, suggested that innovative approaches could lower supposed compute barriers, making advanced AI research accessible to smaller teams and institutions.

Architecture Over Scale: DeepSeek's MLA architecture showed that fundamental innovations in model design could achieve better results than simply scaling up existing architectures, regardless of the computing resources available.

Innovation-First Approach: The company's success in achieving competitive performance with fewer resources proved that intellectual innovation and efficient design could overcome supposed resource advantages of larger competitors.

These predictions from the Google memo proved remarkably accurate, though the specific path to their realization – through a Chinese company focusing on fundamental innovation rather than Western open-source communities – wasn't anticipated. The memo's core insight about the importance of iteration velocity proved true, but DeepSeek showed that this velocity needed to be applied not just to model training and refinement, but to fundamental architectural innovation and organizational approach.

The real lesson may be that while the memo correctly identified that traditional moats wouldn't hold, it didn't fully anticipate how new approaches to AI development could create different kinds of competitive advantages through innovation, efficiency, and fresh perspectives.

What the Memo Missed: A Deeper Analysis

1. The Innovation Source

Chinese Innovation Potential: The memo overlooked China's unique ability to combine academic excellence with rapid iteration, exemplified by DeepSeek's recruitment of top university graduates and competition winners who brought fresh perspectives unburdened by traditional approaches to AI development.

New Organizational Approaches: While focusing on technical capabilities, the memo failed to anticipate how organizational structure could drive innovation. DeepSeek's flat, academic-style hierarchy with no fixed team leaders and unlimited computing resources for promising projects proved revolutionary.

Fresh Talent Perspective: The memo's assumption that experienced researchers would drive innovation was upended by DeepSeek's reported strategy of hiring fresh graduates and turning away candidates with more than 8 years of experience, suggesting that freedom from established thinking patterns could accelerate breakthrough innovations.

Cultural Advantages in Iteration: The memo missed how Chinese tech culture's emphasis on rapid iteration and efficiency could be applied to fundamental research, as shown by DeepSeek's ability to move from theoretical concepts to practical implementation at unprecedented speed.

2. The Nature of Innovation

Fundamental Architectural Innovations: Rather than merely improving upon existing architectures, DeepSeek created entirely new approaches like MLA, demonstrating that revolutionary rather than evolutionary changes were possible even in mature technologies.

Young Talent's Role: The memo underestimated how young researchers, unburdened by traditional approaches, could question fundamental assumptions about AI architecture and training methods. DeepSeek's team of recent graduates proved that fresh perspectives could lead to breakthrough innovations.

Academic-Style Organization Benefits: The value of maintaining an academic research environment in a commercial setting was overlooked. DeepSeek's approach of allowing natural collaboration and free exploration of ideas, similar to university research labs, proved highly effective for fundamental innovation.

Cultural Factors in Development: The memo didn't consider how cultural differences in problem-solving approaches could lead to different kinds of innovation. DeepSeek's combination of Chinese efficiency-focused culture with academic freedom created a unique environment for breakthrough thinking.

3. Resource Utilization Innovation

Efficiency Over Scale: The memo focused on democratizing compute resources but missed how innovative approaches to resource utilization could fundamentally change the equation. DeepSeek's success with limited resources showed that efficiency innovations could overcome raw computing power advantages.

Smart Resource Allocation: DeepSeek's approach of giving unlimited resources to promising projects while maintaining overall efficiency wasn't anticipated by the memo, which focused more on the democratization of existing resources rather than new ways of using them.

Cultural Approaches to Resource Use: The memo didn't consider how different cultural approaches to resource utilization could lead to innovations in efficiency. DeepSeek's focus on doing more with less reflected a distinctly Chinese approach to technology development.

4. Global Competition Dynamics

Cross-Cultural Innovation: The memo's Western-centric view of open-source development missed how global collaboration and cross-cultural approaches could accelerate innovation, as demonstrated by DeepSeek's ability to combine Chinese efficiency with Western open-source principles.

Alternative Development Paths: While focusing on replicating existing approaches more efficiently, the memo missed how entirely different paths to AI development could emerge, such as DeepSeek's focus on fundamental architectural innovation rather than scale.

New Competitive Advantages: The memo's focus on traditional moats missed how new forms of competitive advantage could emerge from combining different cultural and organizational approaches to innovation.

These oversights in the original memo highlight how innovation in AI development isn't just about technical capabilities or resource access, but about fundamentally new approaches to organization, talent, and resource utilization. DeepSeek's success demonstrates that breakthrough innovation often comes from unexpected directions and through unanticipated combinations of technical, organizational, and cultural factors.

DeepSeek: Redefining the Game - A Comprehensive Analysis

1. Technical Revolution

MLA Architecture Innovation: Instead of merely scaling existing transformer architectures, DeepSeek fundamentally reimagined attention mechanisms through its MLA (Multi-head Latent Attention) architecture, reportedly reducing computational costs by about 40% while maintaining or improving performance on most benchmarks.

Efficient Training Approaches: DeepSeek's training methodology challenged conventional wisdom by demonstrating that a model trained with far less compute could match or exceed the performance of more expensive ones. According to the DeepSeek-V3 technical report, the approach included a sparse Mixture-of-Experts design that activates only a fraction of the parameters for each token, FP8 mixed-precision training, and a multi-token prediction objective.

Novel Reinforcement Learning Methods: The R1 model's breakthrough came from rethinking reinforcement learning from first principles, implementing a self-verification system that allowed models to learn from their own reasoning processes, creating a more robust and efficient learning cycle.

Cost-Effective Scaling: Rather than pursuing raw parameter count increases, DeepSeek developed innovative scaling strategies that focused on architectural efficiency, allowing them to achieve state-of-the-art performance with dramatically lower computing costs.
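The reinforcement learning idea described above can be illustrated with a small sketch. It assumes a rule-based reward that checks a completion's format and final answer, followed by scoring each sampled completion relative to the rest of its group, in the spirit of the group-relative approach described in the R1 paper. The tag format, the reward weights, and the function names are hypothetical choices for illustration, not DeepSeek's exact recipe.

```python
import re
import statistics

# Hypothetical output format: reasoning in <think> tags, result in <answer> tags.
THINK_ANSWER = re.compile(
    r"^<think>.+?</think>\s*<answer>(.+?)</answer>$", re.DOTALL
)

def reward(completion, expected_answer):
    """Rule-based reward: a small bonus for format, a larger one for correctness."""
    match = THINK_ANSWER.match(completion.strip())
    if match is None:
        return 0.0  # wrong format earns nothing
    format_reward = 0.1
    accuracy_reward = 1.0 if match.group(1).strip() == expected_answer else 0.0
    return format_reward + accuracy_reward

def group_relative_advantages(rewards):
    """Score each sample against the mean and spread of its own group."""
    mean = statistics.fmean(rewards)
    spread = statistics.pstdev(rewards)
    if spread == 0:
        return [0.0 for _ in rewards]  # identical rewards carry no signal
    return [(r - mean) / spread for r in rewards]

# Three sampled completions for the same prompt ("What is 6 * 7?").
samples = [
    "<think>6 * 7 = 42</think> <answer>42</answer>",
    "<think>6 + 7 = 13</think> <answer>13</answer>",
    "The answer is 42",
]
rewards = [reward(s, "42") for s in samples]
advantages = group_relative_advantages(rewards)

for sample, r, a in zip(samples, rewards, advantages):
    print(f"reward={r:.1f} advantage={a:+.2f} :: {sample}")
```

Only the first completion, which is both well formed and correct, ends up with a positive advantage, so a policy update would push the model toward it. Because the reward is computed by rules rather than by a separate learned judge, the whole loop stays cheap to run.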

2. Organizational Innovation

Young Talent Focus: DeepSeek's deliberate strategy of hiring fresh graduates wasn't just about cost savings; it was a calculated decision to bring in minds unburdened by traditional approaches to AI development. Its recruitment process specifically sought out academic excellence combined with competition success, creating a team that could think beyond established paradigms.

Flat Hierarchy Implementation: Unlike traditional tech companies' lip service to flat structures, DeepSeek implemented a truly horizontal organization where ideas could come from anywhere. Each team member had direct access to computing resources and could initiate projects without bureaucratic approval processes.

Natural Collaboration Systems: Rather than enforcing structured collaboration, DeepSeek created an environment where teams formed organically around promising ideas. This resulted in more efficient resource utilization and faster innovation cycles, as demonstrated by the rapid development of their MLA architecture.

Resource Optimization Strategy: DeepSeek's approach to resource allocation broke with convention: it provided unlimited computing resources for promising projects while maintaining overall efficiency through monitoring and optimization systems.

3. Cultural Integration

Academic-Commercial Balance: DeepSeek successfully merged academic research freedom with commercial efficiency, creating an environment where fundamental research could quickly transition to practical applications without losing innovative potential.

Cross-Cultural Innovation: By combining Chinese efficiency-focused culture with Western open-source principles, DeepSeek created a unique development environment that leveraged the best aspects of both approaches.

Innovation Incentives: Rather than traditional bonus structures or promotion paths, DeepSeek motivated their team through research freedom and resource access, creating a culture where innovation was its own reward.

This redefinition of AI development practices has implications far beyond technical achievements, suggesting new ways of thinking about organization, innovation, and resource utilization in technology development.

The New Moat Theory: A Paradigm Shift in AI Development

From Resource Moats to Innovation Velocity

1. Architectural Innovation

Continuous Improvement Process: Unlike traditional development cycles, DeepSeek established a system where architectural innovations emerge continuously through organic collaboration, with improvements flowing from theoretical insights to practical implementation without bureaucratic barriers.

Novel Approaches to Fundamental Problems: Rather than iterating on existing solutions, DeepSeek encouraged questioning basic assumptions about AI architecture, leading to breakthroughs like the MLA mechanism that fundamentally changed how attention mechanisms work in large language models.

Efficiency-First Design Philosophy: Every architectural decision was made with efficiency as the primary consideration, creating a development culture where doing more with less became a core principle rather than a constraint.

Open Collaboration Framework: DeepSeek's approach to open source went beyond code sharing, creating a genuine collaborative ecosystem where external researchers could meaningfully contribute to core architectural innovations.

2. Organizational Structure

Fresh Perspectives Integration: The company developed sophisticated systems for integrating fresh graduate perspectives with cutting-edge research, creating an environment where lack of experience became an advantage rather than a limitation.

Rapid Iteration Mechanisms: DeepSeek's flat hierarchy enabled ideas to move from conception to implementation without traditional approval chains, creating an unprecedented speed of innovation that became its own form of competitive advantage.

Natural Collaboration Ecosystems: Rather than enforced teamwork, DeepSeek fostered an environment where collaboration emerged naturally around promising ideas, creating more effective and efficient research teams.

Resource Optimization Systems: The company developed sophisticated systems for allocating computing resources based on project potential rather than hierarchical position, ensuring maximum return on computational investment.

3. Cultural Factors

Question Everything Mindset: DeepSeek cultivated a culture where questioning fundamental assumptions was not just allowed but expected, leading to breakthroughs that might have been impossible in more traditional environments.

Efficiency-Driven Innovation: The company's Chinese cultural background contributed to a unique approach where efficiency wasn't just about cost-saving but became a driver of fundamental innovation.

Academic Environment Maintenance: Despite commercial pressures, DeepSeek maintained an academic-style research environment that fostered long-term thinking and fundamental innovation while still delivering practical results.

Innovation Priority Systems: The company developed sophisticated methods for identifying and supporting promising innovations, ensuring resources flowed to the most potentially transformative projects.

Creating Sustainable Advantages

1. Innovation Acceleration

Compounding Knowledge Effects: Each breakthrough at DeepSeek built upon previous innovations in a compounding manner, creating an accelerating cycle of improvement that became increasingly difficult for competitors to match.

Cultural Momentum: The company's success with unconventional approaches created a self-reinforcing culture of innovation that attracted more talented researchers interested in fundamental breakthroughs.

2. Efficiency Networks

Resource Multiplication Effect: DeepSeek's efficiency-focused innovations created a network effect where each improvement in resource utilization enabled more ambitious projects with existing resources.

Collaborative Optimization: The company's open-source approach created a growing network of external collaborators who contributed to both technical and efficiency improvements.

3. Knowledge Moats

Architectural Understanding: Deep knowledge of fundamental AI architectures, developed through original research, created a knowledge advantage that proved more valuable than raw computing power.

Implementation Expertise: The practical experience gained from implementing novel architectures created a growing body of institutional knowledge that became increasingly difficult to replicate.

This new understanding of moats in AI development suggests that sustainable competitive advantages come not from traditional resources or scale, but from creating self-reinforcing cycles of innovation, efficiency, and knowledge accumulation. DeepSeek's success demonstrates that the most effective moats are dynamic rather than static, built on the ability to consistently generate and implement new ideas rather than protect existing advantages.

Future Implications: Reshaping the AI Development Landscape

For Big Tech Companies

Embracing Architectural Innovation: Traditional tech giants must fundamentally rethink their approach to innovation, moving beyond incremental improvements to encourage revolutionary architectural changes. Companies like Google and OpenAI need to create environments where questioning fundamental assumptions isn't just permitted but actively encouraged.

Rethinking Organizational Structure: The success of DeepSeek's flat, academic-style organization suggests that traditional corporate hierarchies might be impediments to breakthrough innovation. Big tech needs to consider radical restructuring, potentially breaking down into smaller, more autonomous research units.

Valuing Fresh Perspectives: The industry must reconsider its emphasis on experience, creating paths for young talent to contribute to core research and development. This might mean establishing separate tracks for experienced engineers and fresh graduates, each with different objectives and success metrics.

Focus on Efficiency: Rather than competing primarily through scale, big tech companies need to prioritize efficiency in both resource usage and organizational structure. This means developing new metrics for success that go beyond model size or raw computing power.

For Open Source Communities

Focus on Fundamental Innovation: Open source developers should concentrate on architectural innovations rather than just implementing existing approaches. DeepSeek's success suggests that breakthrough innovations are possible even with limited resources.

Embracing Global Collaboration: The open source community needs to break down geographical and cultural barriers, creating truly global collaboration networks that can leverage diverse perspectives and approaches.

Prioritizing Efficiency: Rather than trying to match the scale of large tech companies, open source projects should focus on developing more efficient approaches to AI development, following DeepSeek's example.

Valuing New Approaches: The community needs to remain open to radically different approaches to AI development, creating spaces where unconventional ideas can be explored and tested.

For AI Development Methods

Questioning Core Assumptions: The field needs to continuously reassess fundamental assumptions about AI architecture and development. DeepSeek's success with MLA shows that even well-established approaches can be improved through fundamental innovation.

Balancing Theory and Practice: Future development should strike a balance between theoretical innovation and practical implementation, creating environments where new ideas can quickly move from concept to reality.

Resource Efficiency Focus: Development methods need to prioritize efficient resource usage, developing new architectures and training approaches that can achieve more with less.

Cross-Cultural Integration: AI development needs to better integrate different cultural approaches to innovation and problem-solving, creating more diverse and effective development methodologies.

Industry Evolution

New Competition Patterns: The industry is likely to see more competition from unexpected sources, particularly from companies and organizations that can effectively combine different cultural and organizational approaches.

Changing Resource Requirements: The relationship between computing resources and AI capability will continue to evolve, with efficiency innovations potentially reducing the importance of raw computing power.

Organizational Transformation: Successful AI development organizations will likely become more fluid and adaptable, with structures that can quickly reorganize around promising innovations.

Talent Development Changes: The industry will need to develop new approaches to identifying and nurturing talent, potentially moving away from traditional experience-based criteria.

Research Directions

Architecture Innovation: Future research will likely focus more on fundamental architectural innovations rather than scaling existing approaches.

Efficiency Optimization: Research into more efficient training methods and architectures will become increasingly important.

Cultural Integration: Studies of how different cultural approaches to innovation can be effectively combined will become more crucial.

Organizational Design: Research into optimal organizational structures for AI development will gain importance.

This transformation of the AI landscape suggests a future where success depends less on traditional advantages like computing resources or market position, and more on the ability to foster fundamental innovation and efficiently implement new ideas. The example of DeepSeek indicates that this future may arrive sooner than many expect, requiring rapid adaptation from existing players in the field.

Conclusion

The Google memo was prophetic but missed a crucial point: the future of AI wouldn't just be about catching up through open source, but about fundamentally reimagining how AI development happens. DeepSeek's success shows that the real moat isn't resources, experience, or even iteration velocity alone – it's the ability to question fundamental assumptions and create new paradigms.

As we move forward, the industry must recognize that:

Innovation can come from anywhere

Fresh perspectives matter more than experience

Efficiency trumps scale

Open collaboration beats secrecy

The "no moat" theory proved correct, but the reality is more complex: while traditional moats may not exist, new ones are being built through innovation, efficiency, and fresh perspectives. The future belongs not to those who can build the biggest models, but to those who can reimagine how AI development happens.

#AIInnovation #OpenSourceAI #FutureOfTech #DeepSeek #TechStrategy

The question is: if Berkeley scientists can reproduce R1-style results based on R1-Zero for $30 on simple hardware, what will they be able to do with the most powerful NVIDIA architecture? I believe we are just at the beginning of ASI...