DeepSeek's New Open-Source AI Model Challenges Industry Leaders, Shows 31-Point Jump in Coding Ability

In a significant development for open-source AI, DeepSeek AI has released DeepSeek-V3-Base, its latest Mixture-of-Experts (MoE) language model. The release is a substantial step forward for open models, particularly on programming tasks, where its performance approaches that of leading proprietary models.

Technical Architecture: A Deeper Look

DeepSeek-V3-Base is a large-scale implementation of the MoE architecture, with 685 billion parameters in total and 256 routed experts in each MoE layer. Its router scores the experts with a sigmoid function and activates only the top 8 for each token, so only a small fraction of the expert parameters does work on any given input.
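To make the routing idea concrete, here is a minimal Python sketch of sigmoid top-k routing for a single token. It assumes each expert is represented by a learned "centroid" vector and that the selected sigmoid scores are renormalized to sum to 1 to form the gate weights. The function and variable names are hypothetical, the hidden size is a toy value, and details of DeepSeek's actual router (such as its load-balancing adjustments) are omitted.

```python
import math
import random


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def route_token(token: list[float], expert_centroids: list[list[float]], k: int = 8) -> list[tuple[int, float]]:
    """Pick the top-k experts for one token; return (expert_index, gate_weight) pairs."""
    # Each expert gets an independent affinity score in (0, 1). Unlike softmax,
    # the scores are not normalized against each other before selection.
    scores = [
        sigmoid(sum(t * c for t, c in zip(token, centroid)))
        for centroid in expert_centroids
    ]
    # Keep only the k highest-scoring experts (sparse activation).
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    # Renormalize the selected scores so the gate weights sum to 1.
    total = sum(scores[i] for i in top)
    return [(i, scores[i] / total) for i in top]


random.seed(0)
DIM, NUM_EXPERTS, TOP_K = 16, 256, 8  # toy hidden size; 256 experts and top-8 as in the article
centroids = [[random.gauss(0.0, 1.0) for _ in range(DIM)] for _ in range(NUM_EXPERTS)]
token = [random.gauss(0.0, 1.0) for _ in range(DIM)]

routes = route_token(token, centroids, k=TOP_K)
for expert, weight in routes:
    print(f"expert {expert:3d}  weight {weight:.3f}")
print(f"active experts: {len(routes)}/{NUM_EXPERTS} = {len(routes) / NUM_EXPERTS:.1%}")
```

Because sigmoid scores are computed independently per expert, renormalizing over only the chosen experts is what turns them into mixing weights. The last line reports that 8 of 256 experts (about 3.1%) are active for the token.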

Compared with its predecessor, the model has been scaled up along every key configuration dimension. To put this evolution in perspective, here are the main architectural changes behind the performance leap:

The vocabulary has grown from 102,400 to 129,280 tokens. The hidden size has increased from 4,096 to 7,168, and the intermediate (feed-forward) size from 11,008 to 18,432. Most notably, the number of hidden layers has more than doubled, from 30 to 61, and the number of attention heads has quadrupled, from 32 to 128.
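The scale-up factors implied by these figures can be checked with a few lines of Python. The numbers are the ones quoted above; the dictionary and function names are illustrative only.

```python
# Configuration values as quoted in the article: (predecessor, DeepSeek-V3-Base).
CONFIG_CHANGES = {
    "vocabulary size": (102_400, 129_280),
    "hidden size": (4_096, 7_168),
    "intermediate size": (11_008, 18_432),
    "hidden layers": (30, 61),
    "attention heads": (32, 128),
}


def growth_factors(changes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Return new/old for each configuration entry."""
    return {name: new / old for name, (old, new) in changes.items()}


for name, factor in growth_factors(CONFIG_CHANGES).items():
    old, new = CONFIG_CHANGES[name]
    print(f"{name:18s} {old:>7,} -> {new:>7,}  ({factor:.2f}x)")
```

Run as-is, it reports growth factors of roughly 1.26x for the vocabulary, 1.75x for the hidden size, 1.67x for the intermediate size, 2.03x for the layer count, and 4x for the attention heads.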

Real-World Performance and Capabilities

Early adopters have already begun testing the new model through DeepSeek's API, which now clearly identifies the model as V3. The chat interface has also been updated, giving users direct access to the new version.

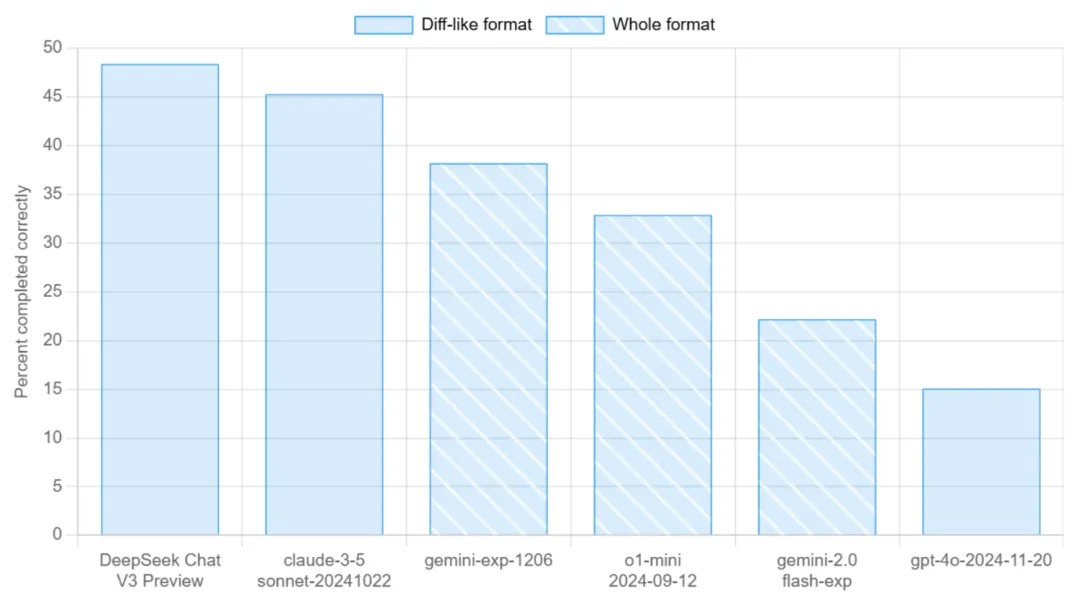

In recent evaluations on Aider's multilingual "polyglot" programming benchmark, DeepSeek-V3-Base performed strongly. The benchmark tests models on 225 challenging programming problems in languages including C++, Go, Java, JavaScript, Python, and Rust. DeepSeek-V3-Base achieved a 48.4% success rate, up from its predecessor's 17.8%: a gain of about 31 percentage points, or roughly 2.7 times the earlier score.
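Because both scores are percentages, it is easy to conflate the absolute gain with the relative one. The short calculation below separates them, assuming both pass rates are measured over the same 225 problems; the variable names are illustrative.

```python
TOTAL_PROBLEMS = 225
old_rate, new_rate = 17.8, 48.4  # pass rates in percent, as reported

point_gain = new_rate - old_rate                  # absolute gain, in percentage points
relative_gain = (new_rate - old_rate) / old_rate  # gain relative to the old score
ratio = new_rate / old_rate                       # new score as a multiple of the old one

# Approximate problem counts, assuming each rate is over all 225 problems.
old_solved = round(old_rate / 100 * TOTAL_PROBLEMS)
new_solved = round(new_rate / 100 * TOTAL_PROBLEMS)

print(f"absolute gain: {point_gain:.1f} percentage points")
print(f"relative gain: {relative_gain:.0%} ({ratio:.1f}x the old score)")
print(f"problems solved: ~{old_solved} -> ~{new_solved} of {TOTAL_PROBLEMS}")
```

The absolute gain is 30.6 percentage points, while the relative gain is about 172%: the new score is roughly 2.7 times the old one, or about 109 problems solved versus about 40.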

LiveBench Results: Comprehensive Evaluation

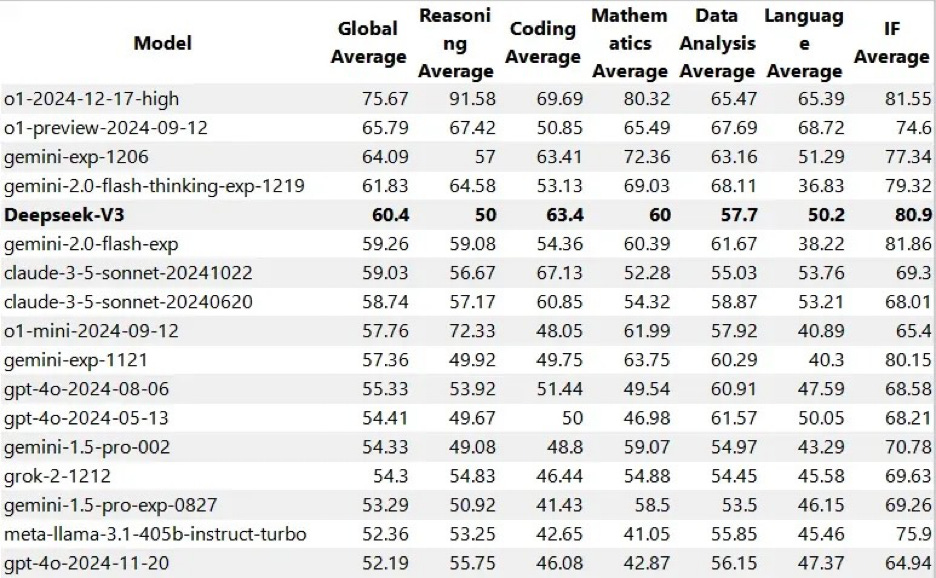

The preliminary LiveBench results show DeepSeek-V3-Base performing strongly across multiple domains, including:

General reasoning tasks

Programming challenges

Mathematical computation

Data analysis

Language processing

Information retrieval

These results position DeepSeek-V3-Base as a serious competitor to established commercial models, including Gemini 2.0 Flash and Claude 3.5 Sonnet.

Community Response and Future Implications

The AI community has responded enthusiastically to the release. Many researchers and practitioners see DeepSeek-V3-Base as a significant step forward for open-source AI, and some suggest it could serve as a viable alternative to proprietary models such as Claude 3.5 Sonnet.

Looking ahead to 2025, the rapid advancement of open-source models like DeepSeek-V3-Base suggests we're entering a new era of AI democratization. The narrowing gap between open-source and proprietary systems could accelerate innovation and increase accessibility across the field.

Developers and researchers who want to explore DeepSeek-V3-Base can download the model from the deepseek-ai/DeepSeek-V3-Base repository on Hugging Face, making it possible to study, evaluate, and build on it directly.
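For a quick look at the architecture figures discussed above without downloading the full weights, one option is to fetch only the model's config.json with the huggingface_hub library. This is a sketch under assumptions: it assumes the repository id deepseek-ai/DeepSeek-V3-Base and the config key names listed in FIELDS, both of which should be verified against the published files. The helper names are hypothetical.

```python
import json

# Assumed key names for the fields discussed in the article; verify against the real file.
FIELDS = [
    "vocab_size",
    "hidden_size",
    "intermediate_size",
    "num_hidden_layers",
    "num_attention_heads",
    "n_routed_experts",
    "num_experts_per_tok",
    "scoring_func",
]


def fetch_config(repo_id: str = "deepseek-ai/DeepSeek-V3-Base") -> dict:
    """Download only config.json (a few KB) instead of the full weights.

    The default repo_id is an assumption; check it on Hugging Face.
    """
    # Imported lazily so summarize_config() works without huggingface_hub installed.
    from huggingface_hub import hf_hub_download

    path = hf_hub_download(repo_id=repo_id, filename="config.json")
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def summarize_config(cfg: dict) -> dict:
    """Pick out the architecture fields of interest; missing keys map to None."""
    return {key: cfg.get(key) for key in FIELDS}
```

Usage: with huggingface_hub installed and network access, `summarize_config(fetch_config())` returns the selected fields. Any key that is absent from the file comes back as None rather than raising an error.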

#AI #MachineLearning #OpenSourceAI #DeepLearning #AIResearch