DeepSeek V3 vs Claude 3.5 Sonnet: A Head-to-Head Comparison of AI Giants

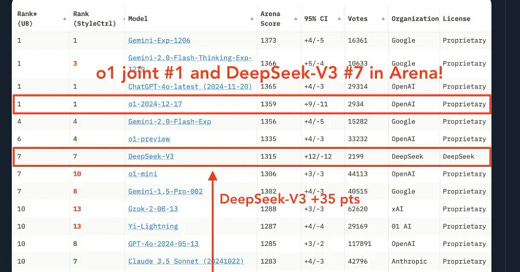

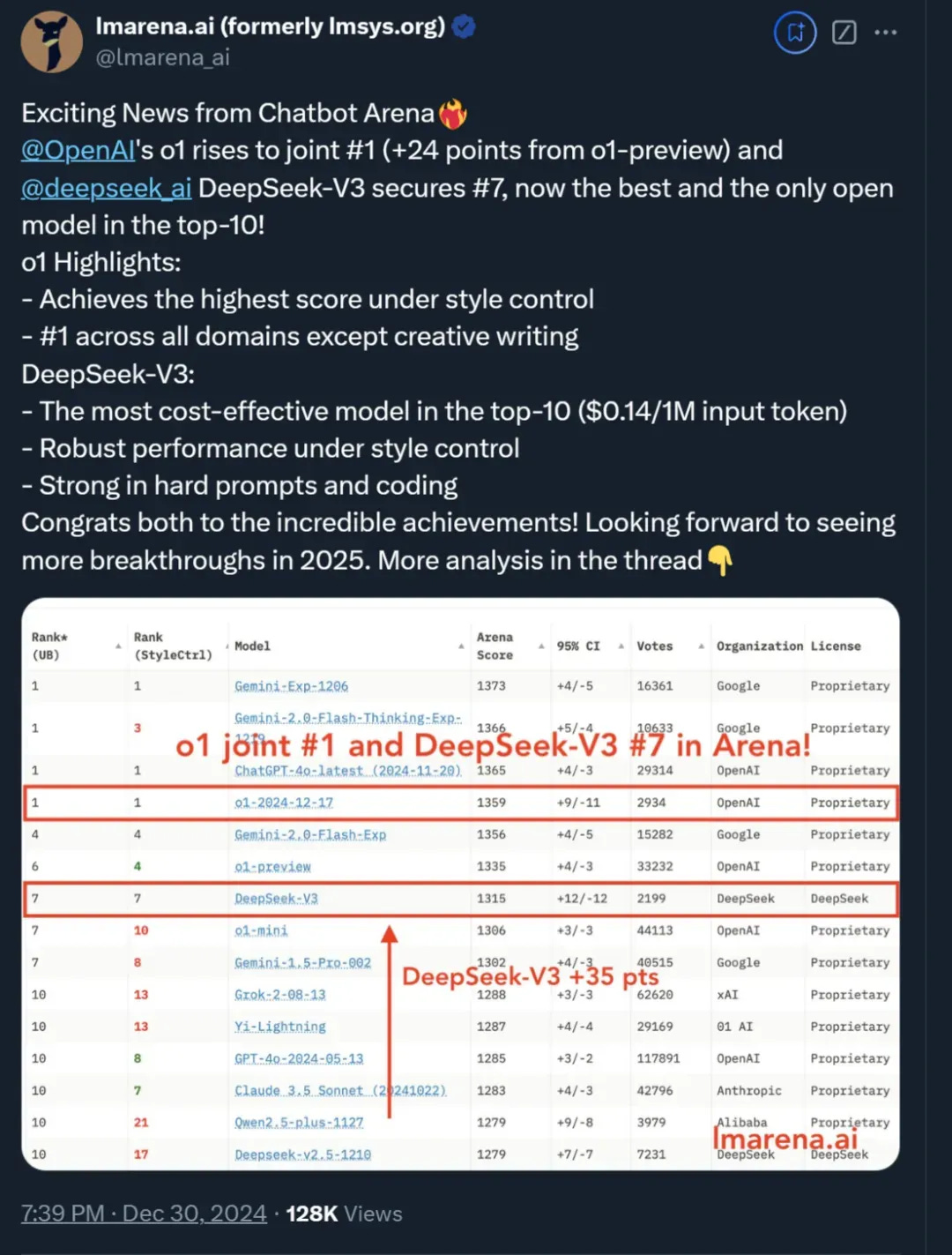

In a significant development for open-source AI, DeepSeek V3 has emerged as the strongest open-source model in recent arena rankings, surpassing o1-mini and becoming the only open-source model to break into the top 10. Let's dive into a detailed comparison with Claude 3.5 Sonnet through real-world testing.

Performance Overview

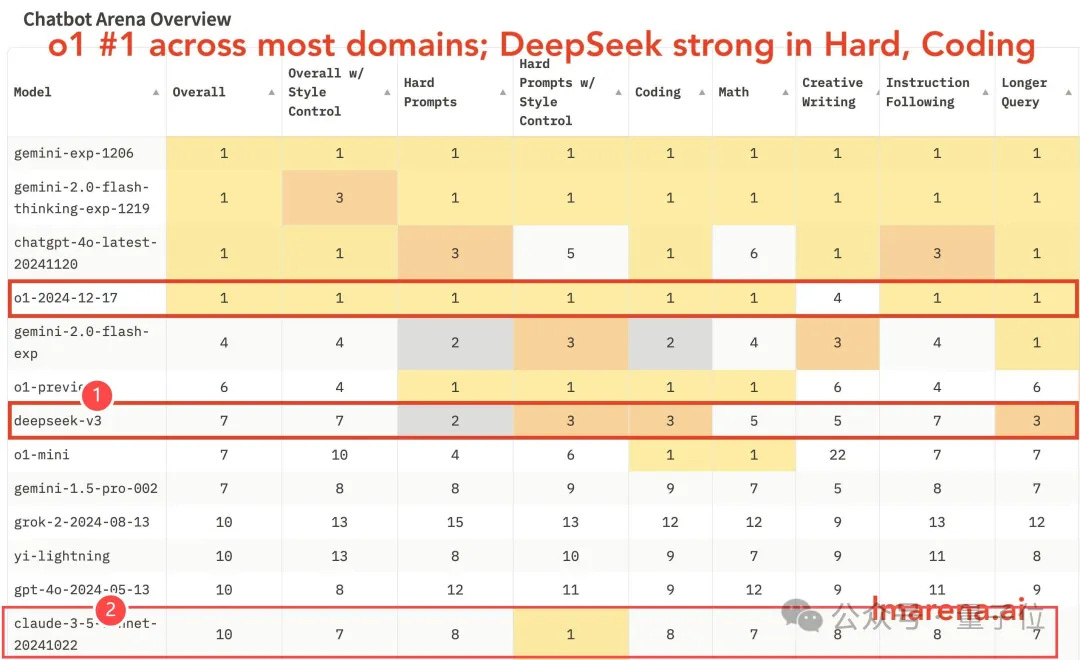

DeepSeek V3 has demonstrated impressive capabilities, particularly excelling in:

Complex prompts

Programming tasks

Mathematical problems

Creative writing

However, when style control is applied (discounting a model's tendency to win human votes with lengthy, well-formatted responses), Claude 3.5 Sonnet maintains a slight edge in understanding complex prompts.

Real-World Testing Results

1. Basic Comprehension Test

In a simple riddle test about family relationships:

DeepSeek V3: Provided the correct answer with detailed logical reasoning

Claude 3.5 Sonnet: Gave an accurate, concise response

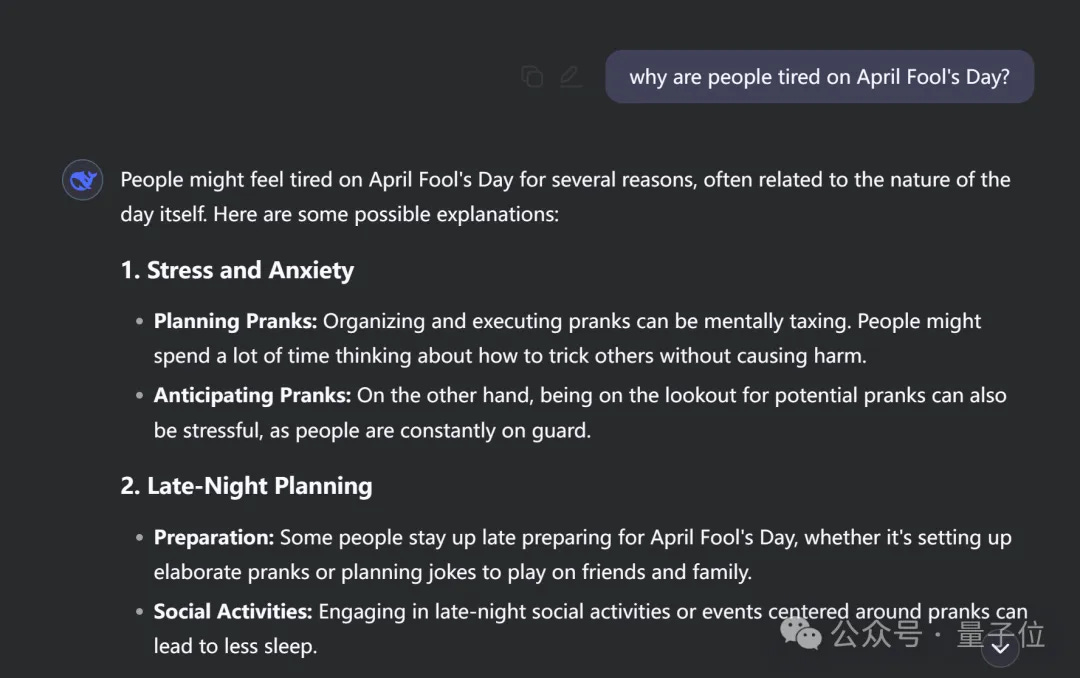

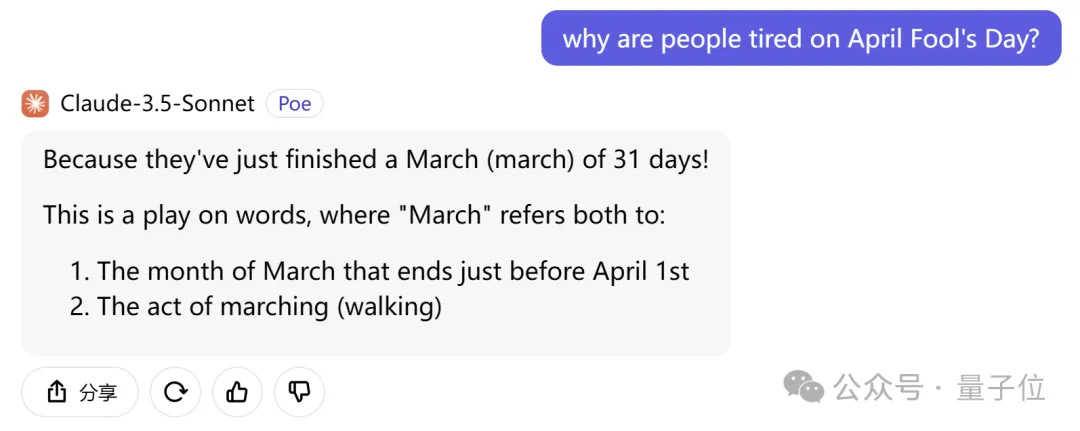

However, on an English wordplay riddle (the "April Fool's Day" question), the results diverged:

DeepSeek V3: Missed the wordplay and gave a literal interpretation

Claude 3.5 Sonnet: Successfully understood and explained the pun

2. Logic and Reasoning

Both models were tested with challenging logic puzzles:

Trap Logic Question: Both models struggled with intentionally misleading questions, showing that even advanced AI can fall for logical traps designed to trick humans.

Knowledge Association Test: Both models successfully identified Tom Cruise as Mary Lee Pfeiffer's son, demonstrating strong factual knowledge capabilities.

3. Mathematical Abilities

When presented with a graduate-level mathematics problem involving surface integrals and Gauss's theorem:

DeepSeek V3: Provided a detailed, step-by-step solution and arrived at the correct answer

Claude 3.5 Sonnet: Offered a simpler approach but reached an incorrect conclusion
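
For readers who want a reminder of the tool involved, Gauss's (divergence) theorem converts a flux integral over a closed surface into a volume integral. The exact problem given to the models is not reproduced in this article, so the statement and the small example below are only a general illustration, with the vector field and region chosen here as assumptions:

$$
\iint_{\partial V} \mathbf{F} \cdot \mathbf{n} \, dS = \iiint_{V} \nabla \cdot \mathbf{F} \, dV
$$

Here $V$ is a bounded region with a piecewise smooth boundary $\partial V$ and $\mathbf{n}$ is the outward unit normal. As an illustrative case, take $\mathbf{F} = (x, y, z)$ on the unit ball: $\nabla \cdot \mathbf{F} = 3$, so

$$
\iint_{\partial V} \mathbf{F} \cdot \mathbf{n} \, dS = 3 \cdot \frac{4\pi}{3} = 4\pi ,
$$

which matches the direct computation, since $\mathbf{F} \cdot \mathbf{n} = 1$ on the unit sphere and its surface area is $4\pi$.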

4. Programming Capabilities

A practical test involving website creation in Scroll Hub showed DeepSeek V3 performing notably well:

More efficient code generation

Better understanding of development requirements

Superior overall implementation

Market Impact and Future Implications

This comparison comes at an interesting time in the AI landscape, with OpenAI's o1 model also making waves by:

Claiming the top position in overall rankings

Leading in most individual categories except creative writing

Scoring 24 points higher than o1-preview
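
To put a 24-point gap in perspective, here is a rough sketch that converts a rating difference into an expected head-to-head win rate. It assumes the arena score behaves like a standard Elo-style rating (base 10, scale 400), which this article does not confirm; the function name is made up for illustration.

```python
def expected_win_rate(score_gap: float, scale: float = 400.0) -> float:
    """Expected win probability of the higher-rated model.

    Assumes an Elo-style logistic curve (base 10, scale 400).
    This is an assumption about how the arena score is defined.
    """
    return 1.0 / (1.0 + 10.0 ** (-score_gap / scale))


if __name__ == "__main__":
    gap = 24  # o1 vs. o1-preview, as reported above
    print(f"Expected win rate at +{gap} points: {expected_win_rate(gap):.3f}")
    # prints: Expected win rate at +24 points: 0.534
```

Under that assumption, a 24-point lead means winning only about 53% of direct matchups, so the gap is real but modest.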

Analysis and Insights

DeepSeek V3's performance demonstrates several key points:

Open-source models are rapidly catching up to proprietary systems

Different models show distinct strengths in various domains

Cultural context affects AI performance (as seen in the language-specific riddles)

Technical tasks like mathematics and programming may be more reliable metrics for comparison than general language understanding

Practical Applications

The comparative strengths of each model suggest different optimal use cases:

DeepSeek V3: Technical tasks, programming, mathematics

Claude 3.5 Sonnet: Natural language understanding, context-aware responses

Both: General knowledge and logical reasoning tasks
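
The use cases above could be turned into a simple routing table. This is a minimal sketch, not a recommendation: the task categories mirror this article's informal tests, and the model labels and the `pick_model` helper are hypothetical names, not real API identifiers.

```python
from enum import Enum


class Task(Enum):
    PROGRAMMING = "programming"
    MATHEMATICS = "mathematics"
    NATURAL_LANGUAGE = "natural_language"
    GENERAL_KNOWLEDGE = "general_knowledge"


# Hypothetical labels used only for illustration.
DEEPSEEK_V3 = "deepseek-v3"
CLAUDE_35_SONNET = "claude-3.5-sonnet"

# Routing based on the strengths observed in the tests above.
ROUTES = {
    Task.PROGRAMMING: DEEPSEEK_V3,
    Task.MATHEMATICS: DEEPSEEK_V3,
    Task.NATURAL_LANGUAGE: CLAUDE_35_SONNET,
}


def pick_model(task: Task, default: str = DEEPSEEK_V3) -> str:
    """Return the preferred model for a task.

    Tasks where both models did well (e.g. general knowledge)
    fall back to the default, which is an arbitrary choice here.
    """
    return ROUTES.get(task, default)


if __name__ == "__main__":
    for task in Task:
        print(f"{task.value}: {pick_model(task)}")
```

Because both models handled general knowledge well, that category has no dedicated route and falls through to whichever default fits your cost or deployment constraints.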

Looking Forward

This comparison highlights the rapid advancement of AI capabilities, particularly in the open-source domain. DeepSeek V3's ability to compete with and sometimes surpass established commercial models suggests a shifting landscape in AI development and accessibility.

For developers and users, these results indicate:

Increasing viability of open-source alternatives

Need for task-specific model selection

Importance of considering cultural and linguistic contexts

Potential for combining different models' strengths in practical applications

As the AI field continues to evolve, such comparisons provide valuable insights into the current state of AI capabilities and the growing competition between open-source and proprietary models.