DeepSeek Kicks Off Open Source Week with FlashMLA: A Game-Changing GPU Optimization for AI

On the first day of its highly anticipated Open Source Week, DeepSeek has made a significant contribution to the AI development community by releasing FlashMLA, a specialized MLA (Multi-head Latent Attention) decoding kernel designed specifically for NVIDIA Hopper GPUs. This release represents a tangible step toward democratizing high-performance AI technology and addresses several critical bottlenecks in large language model inference.

FlashMLA: Breaking Performance Barriers

FlashMLA is built from the ground up to optimize memory usage and computational efficiency for large language model (LLM) inference. According to DeepSeek's benchmarks, FlashMLA achieves impressive performance metrics on H800 SXM5 platforms running CUDA 12.6:

Up to 3000 GB/s of memory throughput in memory-bound configurations

Peak performance of 580 TFLOPS in compute-bound configurations

These numbers reflect significant improvements over standard implementations, potentially allowing organizations to extract maximum value from their existing GPU infrastructure—a critical consideration given the ongoing scarcity and high cost of advanced AI accelerators.
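To make the distinction between the two regimes concrete, here is a minimal roofline-style sketch. It assumes the two quoted figures can be treated as a single bandwidth ceiling and a single compute ceiling, which is a simplification, since DeepSeek reports them for different configurations. The function names are illustrative and not part of FlashMLA.

```python
def attainable_tflops(flop_per_byte, peak_tflops=580.0, bandwidth_gbs=3000.0):
    """Roofline estimate: throughput is capped by compute or by memory traffic."""
    # GB/s * FLOP/byte = GFLOP/s; divide by 1000 to express it in TFLOP/s.
    memory_ceiling = bandwidth_gbs * flop_per_byte / 1000.0
    return min(peak_tflops, memory_ceiling)


def ridge_point(peak_tflops=580.0, bandwidth_gbs=3000.0):
    """Arithmetic intensity (FLOP/byte) at which the two ceilings meet."""
    return peak_tflops * 1000.0 / bandwidth_gbs


low = attainable_tflops(10.0)     # few FLOPs per byte: limited by bandwidth
high = attainable_tflops(1000.0)  # many FLOPs per byte: limited by compute
print(low, high, round(ridge_point(), 1))
```

Under these assumptions, a kernel doing fewer than roughly 190 floating-point operations per byte moved would sit on the bandwidth side of the roofline, which is the usual situation when decoding against a long KV cache.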

The current release supports BF16 precision and implements a paged KV cache with a block size of 64, which suits the variable-length sequences that are common in real-world LLM applications. DeepSeek acknowledges that FlashMLA builds upon previous innovations, citing inspiration from FlashAttention-2, FlashAttention-3, and NVIDIA's CUTLASS library.
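The idea behind a paged cache can be sketched in a few lines. The example below is a generic illustration of block-table bookkeeping, assuming a naive allocator that hands out physical blocks in order; it does not reflect FlashMLA's internal memory layout, and all names are hypothetical.

```python
BLOCK_SIZE = 64  # tokens per cache block, matching the block size stated above


def blocks_needed(seq_len, block_size=BLOCK_SIZE):
    """Number of fixed-size blocks required to hold seq_len tokens."""
    return (seq_len + block_size - 1) // block_size  # ceiling division


def build_block_table(seq_lens, block_size=BLOCK_SIZE):
    """Assign physical block ids to each sequence from a simple counter."""
    table, next_free = [], 0
    for length in seq_lens:
        count = blocks_needed(length, block_size)
        table.append(list(range(next_free, next_free + count)))
        next_free += count
    return table


def locate(table, seq_idx, token_pos, block_size=BLOCK_SIZE):
    """Map a logical token position to (physical block id, offset in block)."""
    return table[seq_idx][token_pos // block_size], token_pos % block_size


table = build_block_table([10, 130, 64])
print(table)                   # one list of block ids per sequence
print(locate(table, 1, 129))   # last token of the 130-token sequence
```

Because each sequence only claims as many blocks as it needs, short and long requests can share one cache pool without padding every sequence to the longest length.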

Community reaction has been overwhelmingly positive, with many developers praising DeepSeek's choice to begin their open source week with such a foundational technology. One enthusiast remarked that "DeepSeek starts with a trump card; FlashMLA is truly capable of accelerating the AGI process."

Practical Implementation

GitHub: https://github.com/deepseek-ai/FlashMLA

For developers looking to integrate FlashMLA into their workflows, DeepSeek has provided straightforward installation and testing procedures. The library can be installed via a standard Python setup process:

    python setup.py install

Performance verification can be conducted using the included benchmark suite:

    python tests/test_flash_mla.py

Implementation in production code follows a relatively straightforward pattern, with the library providing two primary functions: get_mla_metadata for configuration and flash_mla_with_kvcache for the actual attention computation:

    from flash_mla import get_mla_metadata, flash_mla_with_kvcache

    # Scheduling metadata is computed once, outside the layer loop.
    # cache_seqlens, s_q, h_q, h_kv, block_table and dv come from the caller's model code.
    tile_scheduler_metadata, num_splits = get_mla_metadata(
        cache_seqlens, s_q * h_q // h_kv, h_kv
    )

    for i in range(num_layers):
        # q_i and kvcache_i are the query and paged KV cache for layer i.
        o_i, lse_i = flash_mla_with_kvcache(
            q_i, kvcache_i, block_table, cache_seqlens, dv,
            tile_scheduler_metadata, num_splits, causal=True,
        )

The Technology Behind DeepSeek's Efficiency

FlashMLA represents just one component of DeepSeek's broader technological strategy that has enabled them to develop high-performance models with remarkably lower training costs than competitors. This approach combines two key innovations: Mixture of Experts (MoE) architecture and Multi-head Latent Attention (MLA).

Multi-head Latent Attention (MLA)

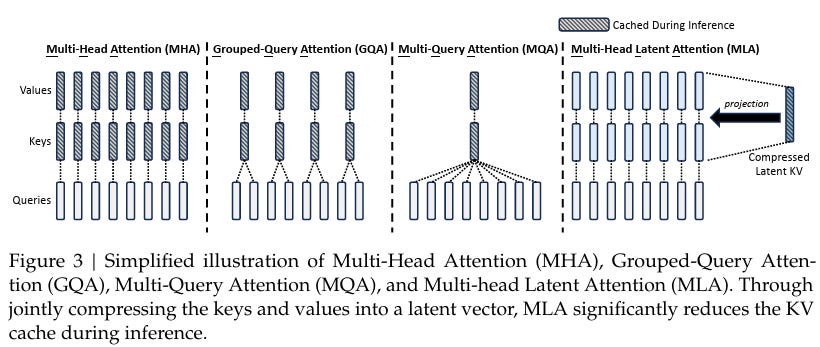

The development of MLA was a months-long effort that has yielded dramatic reductions in memory requirements. According to DeepSeek, MLA reduces the KV cache required for each query by approximately 93.3%, which translates directly into lower memory consumption during inference.
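A back-of-the-envelope comparison shows where savings of this kind come from. The sketch below assumes that a latent-style cache stores one compressed vector plus a small positional component per token, instead of a full key and value per head. The dimensions are illustrative placeholders, so the result is not a reproduction of the 93.3% figure, whose baseline is not specified here.

```python
def mha_cache_elems_per_token(num_heads, head_dim):
    """Standard multi-head attention: one key and one value vector per head."""
    return 2 * num_heads * head_dim


def latent_cache_elems_per_token(latent_dim, rope_dim):
    """Latent-style cache: one shared compressed vector plus a positional part."""
    return latent_dim + rope_dim


def reduction(baseline, compressed):
    """Fraction of the baseline cache that is saved."""
    return 1.0 - compressed / baseline


# Illustrative dimensions only (assumptions, not taken from the article).
baseline = mha_cache_elems_per_token(num_heads=16, head_dim=128)
compressed = latent_cache_elems_per_token(latent_dim=512, rope_dim=64)
print(baseline, compressed, f"{reduction(baseline, compressed):.1%}")
```

The saving per token is multiplied by the number of layers and the number of cached tokens, which is why the effect is most visible on long contexts.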

The KV cache is a critical memory mechanism in Transformer models: it stores the key and value tensors already computed for earlier tokens in the context, so they do not have to be recomputed for every newly generated token. As contexts grow longer, the cache under standard attention grows in proportion to the number of tokens, creating significant memory constraints. By drastically reducing the KV cache required per query, MLA enables more efficient hardware utilization and lower operational costs.
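The redundant work that a KV cache avoids can be shown with a toy single-head decoder in plain Python. This is a minimal sketch: the `project` function is a hypothetical stand-in for learned key and value projections, and nothing here reflects how FlashMLA or MLA is implemented.

```python
import math


def project(token_vec, scale):
    # Hypothetical stand-in for a learned projection: a fixed scaling.
    return [scale * x for x in token_vec]


def attend(query, keys, values):
    """Softmax attention of one query over the given keys and values."""
    norm = 1.0 / math.sqrt(len(query))
    scores = [norm * sum(q * k for q, k in zip(query, key)) for key in keys]
    top = max(scores)
    weights = [math.exp(s - top) for s in scores]
    total = sum(weights)
    dim = len(values[0])
    return [sum(w * v[d] for w, v in zip(weights, values)) / total for d in range(dim)]


def decode_with_cache(tokens):
    """Each token's key and value are projected once and appended to the cache."""
    k_cache, v_cache, outputs = [], [], []
    for tok in tokens:
        k_cache.append(project(tok, 0.5))
        v_cache.append(project(tok, 2.0))
        outputs.append(attend(tok, k_cache, v_cache))
    return outputs, len(k_cache)


def decode_without_cache(tokens):
    """Keys and values for the whole prefix are recomputed at every step."""
    outputs, projections = [], 0
    for t in range(len(tokens)):
        prefix = tokens[: t + 1]
        keys = [project(x, 0.5) for x in prefix]
        values = [project(x, 2.0) for x in prefix]
        projections += len(prefix)
        outputs.append(attend(tokens[t], keys, values))
    return outputs, projections


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
cached_out, cached_work = decode_with_cache(tokens)
plain_out, plain_work = decode_without_cache(tokens)
print(cached_out == plain_out, cached_work, plain_work)
```

Both paths produce the same outputs, but the uncached path repeats projection work that grows quadratically with sequence length, while the cached path trades that work for memory that grows linearly.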

Implementing MLA requires sophisticated design choices that substantially increase complexity. DeepSeek's successful integration of this technology demonstrates their position at the forefront of efficient language model development. The architecture is particularly beneficial for processing long contexts, with memory usage reductions of approximately 80-90% compared to standard attention mechanisms.

DeepSeek has also leveraged the specific advantages of the H20 GPU, which offers higher memory bandwidth and capacity than the H100, further enhancing efficiency gains for inference workloads.

Beyond MLA: DeepSeek's Broader Innovations

While FlashMLA represents an important advancement, it's just one part of DeepSeek's comprehensive approach to efficient AI development. Their recent V3 model incorporates several other breakthrough innovations:

Multi-Token Prediction (MTP)

DeepSeek V3 implements Multi-Token Prediction at an unprecedented scale. Unlike traditional next-token prediction, MTP trains the model to predict several upcoming tokens at each position. The additional prediction modules significantly enhance training performance and can be removed at inference time, a classic example of algorithmic innovation that delivers performance improvements with lower computational requirements.
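The difference from next-token prediction is easiest to see in how training targets are built. The sketch below is a generic illustration of that idea, with a hypothetical helper name; it does not describe the specific MTP modules used in DeepSeek V3.

```python
def multi_token_targets(tokens, depth):
    """For each position, pair the context so far with the next `depth` tokens."""
    examples = []
    for i in range(len(tokens) - depth):
        context = tokens[: i + 1]
        targets = tokens[i + 1 : i + 1 + depth]
        examples.append((context, targets))
    return examples


sentence = ["the", "cat", "sat", "on", "mat"]

next_token = multi_token_targets(sentence, depth=1)   # ordinary next-token setup
two_tokens = multi_token_targets(sentence, depth=2)   # two targets per position

for context, targets in two_tokens:
    print(context, "->", targets)
```

With a depth of 1 this reduces to ordinary next-token prediction, so the extra targets act purely as an additional training signal that can be ignored when generating.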

Mixture of Experts (MoE) Architecture

DeepSeek V3 employs the increasingly popular MoE architecture, which combines multiple specialized expert models into a larger system capable of demonstrating emergent abilities. The primary challenge with MoE models is determining which tokens should be routed to which expert submodels.

DeepSeek's innovation comes in the form of an efficient "gating network" that balances token routing across experts without degrading model performance. This design ensures that routing is highly efficient, requiring adjustment of only a small fraction of parameters (relative to the overall model size) for each token during training. The result is improved training efficiency and reduced inference costs.
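As a rough picture of what a gating network does, the sketch below implements generic top-k routing with softmax-normalised weights and counts how many tokens land on each expert. It is a textbook-style illustration under simplifying assumptions, not DeepSeek's gating or load-balancing method, and the logits are made-up values.

```python
import math


def top_k_gate(logits, k):
    """Pick the k highest-scoring experts and softmax-normalise their weights."""
    chosen = sorted(range(len(logits)), key=lambda e: logits[e], reverse=True)[:k]
    top = max(logits[e] for e in chosen)
    exps = {e: math.exp(logits[e] - top) for e in chosen}
    total = sum(exps.values())
    return {e: exps[e] / total for e in chosen}


def expert_load(batch_logits, k):
    """Count how many tokens are routed to each expert."""
    load = [0] * len(batch_logits[0])
    for logits in batch_logits:
        for expert in top_k_gate(logits, k):
            load[expert] += 1
    return load


# One row of router scores per token, one column per expert (illustrative values).
batch = [
    [2.0, 1.0, 0.1, -1.0],
    [0.0, 3.0, 2.5, 0.2],
    [1.5, 0.1, 0.3, 2.0],
]
print(top_k_gate(batch[0], k=2))
print(expert_load(batch, k=2))
```

Only the selected experts run for a given token, which is where the compute saving comes from; the load counts show why balancing matters, since an expert that receives most of the tokens becomes a bottleneck.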

Some have expressed concern that the efficiency gains from MoE architectures might reduce investment incentives in the field. However, industry experts counter that the economic benefits of more powerful AI models are so substantial that any cost savings are immediately reinvested in developing even larger models, ultimately accelerating the scaling process rather than diminishing overall investment.

Precision and Efficiency Trade-offs

DeepSeek's adoption of FP8 precision for training represents another optimization, although this technique has been employed by leading research labs for some time. The balance between precision and computational efficiency is a crucial consideration in modern AI development, with lower precision formats offering significant performance advantages when implemented correctly.
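The precision side of this trade-off can be illustrated by rounding a value to a limited number of mantissa bits. The sketch below assumes 3 stored mantissa bits for FP8 (its E4M3 variant) and 7 for BF16, and it deliberately ignores exponent range, saturation, and subnormals, so it only models rounding error rather than a full number format.

```python
import math


def round_to_mantissa_bits(x, mantissa_bits):
    """Round x to the nearest value with the given number of stored mantissa bits."""
    if x == 0.0:
        return 0.0
    m, e = math.frexp(x)               # x = m * 2**e with 0.5 <= |m| < 1
    steps = 2 ** (mantissa_bits + 1)   # stored bits plus the implicit leading bit
    return math.ldexp(round(m * steps) / steps, e)


value = 1.1
as_fp8 = round_to_mantissa_bits(value, 3)    # E4M3-like mantissa width
as_bf16 = round_to_mantissa_bits(value, 7)   # BF16-like mantissa width
print(as_fp8, as_bf16)
print(abs(as_fp8 - value) / value, abs(as_bf16 - value) / value)
```

The coarser format halves storage and memory traffic per value but carries a visibly larger relative error, which is why low-precision training depends on careful scaling to stay numerically stable.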

From V3 to R1: The Power of Reinforcement Learning

DeepSeek's R1 model builds upon the strong foundation established by V3, with reinforcement learning (RL) playing a crucial role in its enhanced capabilities. The RL approach focuses on two key aspects:

Formatting improvements to ensure output coherence

Usefulness and safety enhancements to make the model practical and harmless

Interestingly, the model's reasoning abilities emerged naturally during the fine-tuning process on synthetic datasets, similar to the development pattern observed in OpenAI's o1 model. The R1 paper notably omits specific computation figures, as revealing the computational resources used would indirectly disclose that DeepSeek's actual GPU fleet exceeds their publicly stated numbers. The scale of reinforcement learning required, particularly for synthetic data generation, necessitates substantial computing power.

The Promise of Distillation

Perhaps the most striking revelation from the R1 paper is the effectiveness of distillation—using the outputs from reasoning-capable models to fine-tune smaller, non-reasoning models and imbue them with reasoning abilities. The dataset constructed for this purpose contains approximately 800,000 samples.
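In outline, building such a dataset means collecting a teacher model's reasoning and answers and storing them as supervised fine-tuning records. The sketch below uses a hypothetical `toy_teacher` stub in place of a real reasoning model, and the filtering step and record format are assumptions made for illustration rather than the procedure described in the R1 paper.

```python
def toy_teacher(prompt):
    """Hypothetical stand-in for a reasoning model; only handles 'a+b' prompts."""
    parts = [p.strip() for p in prompt.split("+")]
    if len(parts) != 2 or not all(p.isdigit() for p in parts):
        return "", ""  # the stub produces nothing for prompts it cannot solve
    a, b = int(parts[0]), int(parts[1])
    return f"{a} plus {b} equals {a + b}.", str(a + b)


def build_distillation_set(prompts, teacher):
    """Turn teacher generations into prompt/completion records for fine-tuning."""
    records = []
    for prompt in prompts:
        reasoning, answer = teacher(prompt)
        if not answer:
            continue  # assumed filter: skip samples without a final answer
        completion = f"{reasoning}\n\nAnswer: {answer}"
        records.append({"prompt": prompt, "completion": completion})
    return records


records = build_distillation_set(["2+3", "hello", "10+5"], toy_teacher)
for record in records:
    print(record)
```

A smaller model fine-tuned on records like these learns to imitate the teacher's step-by-step output, which is the sense in which reasoning ability is transferred.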

This approach opens exciting possibilities for the wider AI community, as researchers can now leverage R1's Chain of Thought (CoT) outputs to create their own datasets and develop reasoning-capable models. In the future, we might see many smaller models exhibiting enhanced reasoning capabilities, elevating the overall performance of more compact AI systems.

Implications for the AI Ecosystem

DeepSeek's decision to open source FlashMLA as the first component of their Open Source Week has several important implications for the broader AI ecosystem:

Democratization of AI Resources

By sharing optimized implementations that extract maximum performance from existing hardware, DeepSeek is helping to address one of the most significant barriers to AI research and development: computational efficiency. This is particularly valuable for smaller organizations and academic institutions that cannot afford to continually upgrade their hardware infrastructure.

Advancing Open Source AI

The release aligns with a growing movement toward more open and collaborative AI development. While some leading AI labs have moved toward increasingly closed models, DeepSeek's commitment to sharing not just model weights but also critical implementation details and optimizations represents a different philosophy—one that prioritizes collective advancement over proprietary advantage.

Setting Industry Standards

By beginning their Open Source Week with such a fundamental technology, DeepSeek establishes a high bar for meaningful open source contributions in the AI space. Rather than releasing peripheral tools or limited models, they've chosen to share technology that directly addresses core challenges in the field.

Looking Forward

As DeepSeek's Open Source Week continues, the AI community eagerly anticipates the remaining four repositories promised for release. If FlashMLA is any indication, these forthcoming contributions may include additional optimizations, training methodologies, or architectural innovations that have enabled DeepSeek to achieve impressive results with relatively limited resources compared to industry giants.

The strategic decision to open source these technologies suggests a recognition that advancing the field of artificial general intelligence requires collaborative effort. By sharing their innovations, DeepSeek not only builds goodwill within the developer community but potentially accelerates the pace of overall advancement in AI capabilities—a rising tide that lifts all boats.

For developers and researchers currently working with large language models, FlashMLA represents an immediately valuable contribution that could enhance performance and reduce costs in existing projects. As the remaining repositories are released throughout the week, we may see a more complete picture emerge of how these individual components work together to form DeepSeek's efficient and powerful AI stack.

DeepSeek's Open Source Week has begun with a clear statement of intent: to share substantive, production-tested tools that address fundamental challenges in AI development. With FlashMLA, they've demonstrated a commitment to transparency and collaboration that benefits the entire field. As the week progresses, the AI community stands to gain further insight into the methodologies and technologies that have enabled DeepSeek to achieve remarkable efficiency in their pursuit of advanced artificial intelligence.