Berkeley Researchers Replicate DeepSeek R1's Core Tech for Just $30: A Small Model RL Revolution

A Berkeley AI Research team led by PhD candidate Jiayi Pan has reproduced DeepSeek R1-Zero's key technologies for under $30, less than the cost of a dinner for two. By getting small language models to develop sophisticated reasoning behavior, the project makes this line of AI research accessible to far more people.

Key achievements:

Successful replication under $30

Complex reasoning in small models (1.5B parameters and up)

Performance comparable to larger systems

The Core Innovation

The team used the countdown game as their testing ground: a numbers puzzle in which a player combines a set of given numbers with basic arithmetic to reach a target value. They demonstrated that even modest language models can develop complex problem-solving strategies through reinforcement learning, with models evolving from random guessing to search and self-verification techniques.
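To make the task concrete, here is a minimal sketch of how an answer to a countdown puzzle could be checked automatically, which is the kind of rule-based signal a reinforcement learning setup needs. It is an illustration only, not the team's code: the function names (`countdown_reward`, `_evaluate`), the 0/1 scoring, and the rule that each number must be used exactly once are all assumptions made for this example.

```python
import ast
import operator
from collections import Counter

# Hypothetical helper names; not taken from the TinyZero repository.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def _evaluate(node, used):
    """Evaluate an arithmetic AST, recording every integer literal it uses."""
    if (
        isinstance(node, ast.Constant)
        and isinstance(node.value, int)
        and not isinstance(node.value, bool)
    ):
        used.append(node.value)
        return node.value
    if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
        left = _evaluate(node.left, used)
        right = _evaluate(node.right, used)
        return _OPS[type(node.op)](left, right)
    # Anything else (names, calls, unary minus, ...) is rejected.
    raise ValueError("unsupported expression")


def countdown_reward(expression, numbers, target):
    """Return 1.0 if the expression is valid and hits the target, else 0.0.

    Assumed rule: every given number must appear exactly once.
    """
    used = []
    try:
        tree = ast.parse(expression, mode="eval")
        value = _evaluate(tree.body, used)
    except (SyntaxError, ValueError, ZeroDivisionError):
        return 0.0
    if Counter(used) != Counter(numbers):
        return 0.0
    return 1.0 if abs(value - target) < 1e-9 else 0.0


print(countdown_reward("(25 - 5) * 3", [3, 5, 25], 60))  # 1.0
print(countdown_reward("25 * 3", [3, 5, 25], 75))        # 0.0, the 5 is unused
```

Because the check is purely mechanical, a model can be scored on thousands of attempts without human labels, and the only way to raise the score is to actually find a correct expression.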

Size and Algorithm Insights

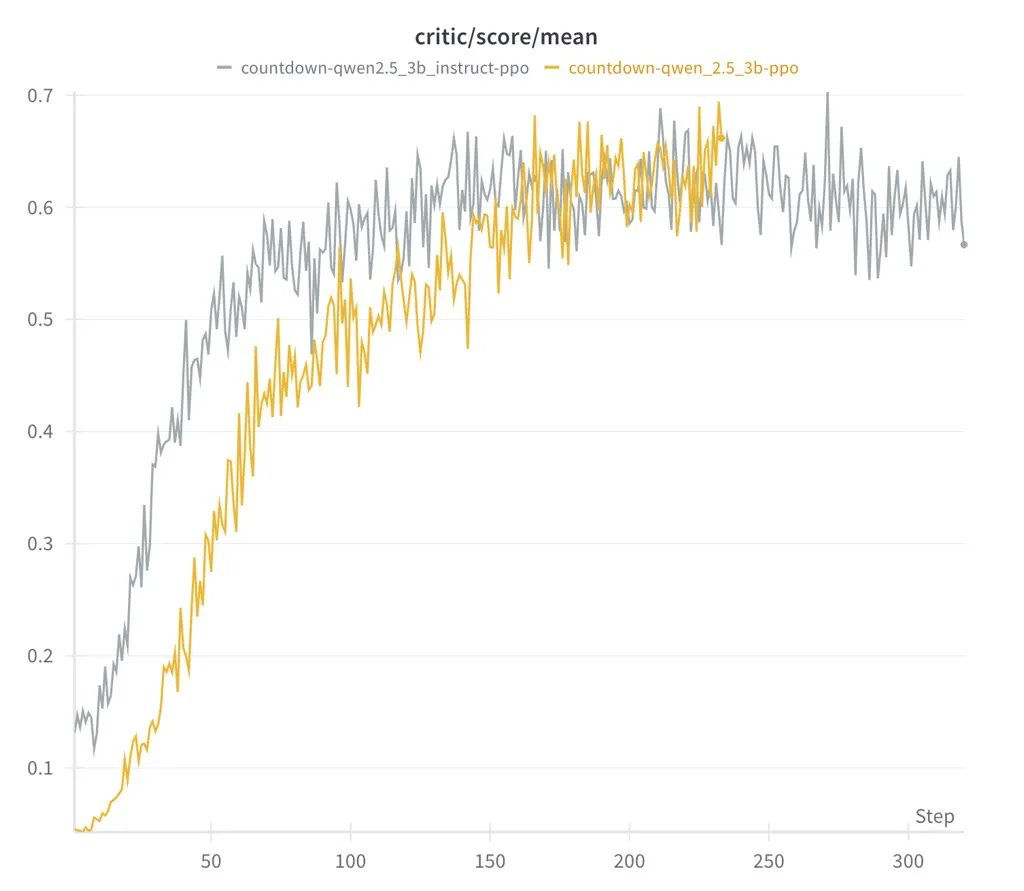

The research revealed that while the smallest 0.5B model could only manage basic guessing, models from 1.5B upward demonstrated remarkable problem-solving capabilities. Surprisingly, the choice of reinforcement learning algorithm (PPO, GRPO, or PRIME) proved less critical than expected, with all approaches achieving similar results.

Task-Specific Intelligence

One of the most fascinating discoveries was how models developed distinct problem-solving strategies for different tasks. In the countdown game, they mastered search and self-verification. For multiplication problems, they learned to apply the distributive law. This suggests the models develop task-specific rather than general problem-solving approaches.
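For readers who want to see what "applying the distributive law" means for multiplication, here is a small worked sketch. It splits one factor into place-value parts and sums the partial products, for example 23 × 47 = 23 × 40 + 23 × 7. The function name `distributive_multiply` is hypothetical, and the code only illustrates the arithmetic; it is not a description of how the model computes internally.

```python
def distributive_multiply(a, b):
    """Multiply a by a non-negative integer b via place-value partial products.

    Returns (total, parts, partials), where parts are the place-value
    pieces of b and partials are a times each piece.
    """
    if b < 0:
        raise ValueError("this sketch assumes b is non-negative")
    parts = []
    place = 1
    remaining = b
    while remaining > 0:
        digit = remaining % 10
        if digit:
            parts.append(digit * place)
        remaining //= 10
        place *= 10
    parts.reverse()  # largest place value first, e.g. 47 -> [40, 7]
    partials = [a * p for p in parts]
    return sum(partials), parts, partials


total, parts, partials = distributive_multiply(23, 47)
print(parts, partials, total)  # [40, 7] [920, 161] 1081
```

Breaking a hard product into easier ones like this is a different strategy from the trial-and-check search that suits the countdown game, which is the contrast the researchers observed.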

The Bigger Picture

This research carries echoes of the Transformer revolution, democratizing access to cutting-edge AI technology. With the entire project costing less than $30 and all code available on GitHub, it opens doors for researchers worldwide to contribute to AI advancement.

Richard Sutton, the father of reinforcement learning, would likely find vindication in these results. They align with his vision of continuous learning as the key to AI advancement, demonstrating that sophisticated AI capabilities can emerge from relatively simple systems given the right learning framework.

This work from a Berkeley research team may well mark a turning point in AI development, suggesting that groundbreaking advances don't require massive resources, just clever thinking and the right approach.

#AIResearch #DeepSeek #ReinforcementLearning #OpenSourceAI #MachineLearning

Your substack did NOT link to the original authors which is NOT acceptable!

Let's give them credit!

GitHub: https://github.com/Jiayi-Pan/TinyZero

Source on X: https://x.com/jiayi_pirate/status/1882839370505621655

I'm an investor, trying to understand what effect this might have on business. Will research like this lead to development at low cost of specialised AI-based applications to do specific tasks like monitor processes, answer questions from members of the team and the public, spot accounting anomalies, etc? Or is that already happening and I just don't know about it?